Introduction

More and more protocols are being added for the Internet of Things (IoT) as large vendors address the deficiencies of their products. These higher-level IoT protocols are suitable for a broad range of applications. For example, MQTT has been used for many years to manage messaging between server applications and has now been updated to address secure small-client usage. DDNS has been used to provide browser access to web devices, and CoAP has been extended with other protocols to provide security management and more robust operation. All of these protocols can be used for managing and configuring a plethora of home devices. Selecting the best protocol for the application at hand requires a deeper understanding of these protocols, their security and configuration options, and the application’s requirements.

Knowing the correct protocol or set of protocols for a given application, covering communication, security, management and scalability, is the first design consideration. Next, the available implementations of each protocol must be understood. From this understanding, the designer can select the optimal implementation of each protocol and then, from these, the best set of protocol implementations for the system. This decision will be affected by requirements related to the supporting hardware that has been selected.

The protocol set selection problem is closely tied to the implementation of the protocol, the hardware requirements specifications, and the additional hardware and software components that support the protocol set. This makes the decision a very complex one. All aspects of deployment, operation, management and security, including the implementation environment, must be considered as part of the protocol selection, and the selection must be made within the requirements specifications.

For the IoT protocol space, standards have not yet converged for particular applications, and the market will ultimately decide which of these standards are most relevant. This is both a problem and an opportunity. The protocol that is selected today may become obsolete and need to be replaced. Conversely, the protocol selected today could become the standard in the future. For a developer, predicting the converged protocol is usually the prudent path, but implementation costs and risk must always be considered. Also, using specific features of the hardware and operating system to implement the protocols, and then using specific protocol, operating system and hardware features to implement the application, can make future migration to a new protocol, or porting the application to a completely new environment, very difficult.

This article examines the range of protocols available, the specific requirements that drive the features of these protocols and considers the implementation requirements to build a complete system.

Protocols and Vendors

Most higher-level IoT protocols were developed by specific vendors, which typically promote their own protocol choices, don’t clearly define their assumptions, and ignore the alternatives. Higher-level protocols for IoT do offer a choice of capabilities and features, but relying on vendor information to select a specific IoT protocol or protocol set is problematic. Most of the comparisons that have been produced are insufficient for understanding the tradeoffs because of vendors’ obfuscation and omissions.

IoT protocols are often bound to a business model; for example, Azure-IoT is linked to Microsoft analytics offerings primarily for large enterprises and Thread is linked to a consortium of hardware vendors and Google which want to dominate home automation. Other times these protocols are incomplete and/or used to support existing business models and approaches or they offer a more complete solution but the resource requirements are unacceptable for smaller sensors. In general, the key assumptions behind the use of the protocols are not clearly stated which makes comparison difficult.

The fundamental assumptions associated with IoT applications are:

- various wireless connections will be used,

- devices will range from tiny MCUs to high performance systems with the emphasis on small MCUs,

- security is a core requirement,

- operation may be discontinuous,

- data will be stored in the cloud and may be processed in the cloud,

- connections back to the cloud storage are required,

- and routing of information through wireless and wireline connections to the cloud storage is required.

Assumptions made by the protocol developers in addition to this list require deeper investigation and will strongly influence the features of the protocol they designed. By looking at the key features of these protocols and looking at the implementation requirements, designers can develop a clearer understanding of exactly what is required in both the protocol area and in the supporting features area to improve their designs. Before we look at this, let’s review the protocols in question.

IoT or M2M Protocols

There is a broad set of protocols which are promoted as the silver bullet of IoT communication for the higher level machine to machine (M2M) protocol in the protocol stack. Note that these IoT or M2M protocols focus on the application data transfer and processing although some such as SNMP are focused more on remote node management. The following list summarizes the protocols generally considered.

- Azure-IoT

- CoAP

- Continua – Home Health Devices

- DDS

- DPWS: WS-Discovery, SOAP, WS-Addressing, WSDL, & XML Schema

- HTTP/REST

- MQTT and MQTT-SN/S

- SNMP

- Thread

- UPnP

- XMPP

- ZeroMQ

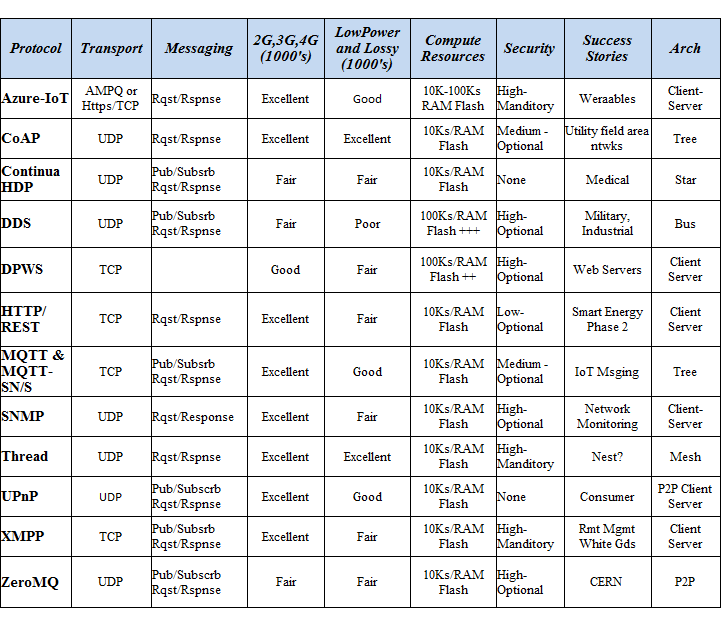

These protocols have their features summarized in the following table. Several key factors related to infrastructure and deployment are considered separately below.

Using a POSIX/Linux API makes implementation of IoT protocols simpler because many of these protocols run above the transport layer. The Unison OS, for example, offers most IoT protocols off the shelf, providing a tiny, fast and simple multi-protocol option.

Key Protocol Features

Communications in the Internet of Things (IoT) are based on the Internet TCP/UDP protocols and the associated Internet protocols for setup, which means either UDP datagrams or TCP stream sockets. Small-device developers claim that UDP offers large advantages in performance and size, which will in turn minimize cost. In many instances the advantage is not significant.

Stream sockets suffer a performance hit, but they do guarantee in-order delivery of all data without errors. The performance hit on sending sensor data on an STM32F4 at 167MHz is less than 16.7% (measured with 2KB packets; smaller packets reduce the performance hit). By using stream sockets, standard security protocols can also be used. Similarly, the memory cost of upgrading to TCP, an additional 20K of flash and 8K of RAM, is generally small.
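The difference between the two socket types can be sketched with the BSD-style socket API that Python exposes. This is only an illustration over the loopback interface, not a benchmark and not code for any particular RTOS; the payload and the function names `send_over_udp` and `send_over_tcp` are hypothetical.

```python
import socket

PAYLOAD = b"temp=21.5;humidity=40"  # hypothetical sensor reading


def send_over_udp(payload: bytes) -> bytes:
    """Send one datagram. UDP itself gives no delivery or ordering guarantee."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
            socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0: the OS picks a free port
        receiver.settimeout(2.0)
        sender.sendto(payload, receiver.getsockname())
        data, _addr = receiver.recvfrom(4096)
        return data


def send_over_tcp(payload: bytes) -> bytes:
    """Send the same bytes over a stream socket, which delivers them in order."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen(1)
        listener.settimeout(2.0)
        with socket.create_connection(listener.getsockname(), timeout=2.0) as client:
            conn, _addr = listener.accept()
            with conn:
                conn.settimeout(2.0)
                client.sendall(payload)
                client.shutdown(socket.SHUT_WR)  # signal the end of the stream
                chunks = []
                while True:
                    chunk = conn.recv(4096)
                    if not chunk:  # empty read: the peer closed its side
                        break
                    chunks.append(chunk)
                return b"".join(chunks)
```

The stream version needs connection setup and teardown that the datagram version does not, which is where the extra code and run-time cost come from. In return, a TLS layer can be placed directly on top of it.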

Messaging, the common IoT approach, is very important, and many protocols have migrated to a publish/subscribe model. With many nodes connecting and disconnecting, and these nodes needing to connect to various applications in the cloud, the publish/subscribe request/response model has an advantage. It responds dynamically to random on/off operation and can support many nodes.
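The publish/subscribe idea can be illustrated with a small in-memory broker. This is a sketch under simplifying assumptions (exact topic matching, no network, no persistence) and does not implement MQTT or any other protocol listed here; the `Broker` class, the topic name and the retained-message behaviour are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[str, bytes], None]


class Broker:
    """Decouples publishers from subscribers; neither needs to know the other."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self._retained: Dict[str, bytes] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)
        # A late subscriber immediately receives the last retained value, if any.
        if topic in self._retained:
            handler(topic, self._retained[topic])

    def publish(self, topic: str, payload: bytes, retain: bool = False) -> int:
        """Deliver to current subscribers and return how many received it."""
        if retain:
            self._retained[topic] = payload
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)


broker = Broker()
received = []

# The sensor publishes before any application has subscribed.
broker.publish("sensors/kitchen/temp", b"21.5", retain=True)
# An application subscribes later and still gets the retained reading.
broker.subscribe("sensors/kitchen/temp", lambda t, p: received.append((t, p)))
broker.publish("sensors/kitchen/temp", b"22.0")
```

Because the sensor and the application only share a topic name, either side can connect or disconnect without the other being reconfigured.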

Two protocols, CoAP and HTTP/REST, are both based on request/response without a publish/subscribe approach. In the case of CoAP, 6LoWPAN and the automatic addressing of IPv6 are used to uniquely identify nodes. In the case of HTTP/REST, the approach is different in that the request can be anything, including a request to publish or a request to subscribe, so it becomes the general case if designed in this way. These protocols are being merged to provide a complete publish/subscribe request/response model, with Thread as an example.

System architectures are varied, including client server, tree or star, bus, and P2P. The majority use client-server but others use bus and P2P approaches. A star is a truncated tree approach. Performance issues exist for these various architectures with the best performance generally found in P2P and bus architectures. Simulation approaches or prototype approaches are preferred early in design to safeguard against surprises.

Scalability depends on adding many nodes in the field and on being able to increase cloud resources easily to service these new nodes. The various architectures have different properties. For client-server architectures, increasing the pool of available servers is sufficient and easy. For bus and P2P architectures, scale is inherent in the architecture but there are no cloud services. In the case of tree or star connected architectures, there can be issues associated with adding extra leaves on the tree, which burden the communication nodes.

Another aspect of scalability is dealing with a large number of changing nodes and linking these nodes to cloud applications. As discussed, publish/subscribe request/response systems are intended for scalability because they deal with nodes that go offline for a variety of reasons and allow applications to receive specific data when they decide to subscribe and request data, resulting in fine data flow control. Less robust approaches don’t scale nearly as well.

Low Power and Lossy Networks have nodes that go on and off. This dynamic behaviour may affect entire sections of the network, so protocols are designed for multiple paths and dynamic reconfiguration. Specific dynamic routing protocols found in Zigbee, Zigbee IP (using 6LoWPAN) and native 6LoWPAN ensure that the network adapts. Without these features, nodes must be treated as operating discontinuously, which makes their resource requirements much higher.
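The benefit of multiple paths can be shown with a toy route search that skips nodes which are currently off. This is a plain breadth-first search over a hypothetical five-node mesh, chosen for brevity; it is not the routing algorithm used by Zigbee or 6LoWPAN networks, and the node names are invented.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional


def find_route(links: Dict[str, List[str]], source: str, target: str,
               offline: FrozenSet[str] = frozenset()) -> Optional[List[str]]:
    """Return a fewest-hops route that avoids offline nodes, or None."""
    if source in offline or target in offline:
        return None
    previous: Dict[str, Optional[str]] = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk the predecessor chain back to the source.
            route: List[str] = []
            step: Optional[str] = node
            while step is not None:
                route.append(step)
                step = previous[step]
            return route[::-1]
        for neighbour in links.get(node, []):
            if neighbour not in offline and neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


# Hypothetical mesh: two independent paths from the sensor to the gateway.
LINKS = {
    "sensor": ["relay_a", "relay_b"],
    "relay_a": ["sensor", "gateway"],
    "relay_b": ["sensor", "relay_c"],
    "relay_c": ["relay_b", "gateway"],
    "gateway": ["relay_a", "relay_c"],
}

normal = find_route(LINKS, "sensor", "gateway")
rerouted = find_route(LINKS, "sensor", "gateway", frozenset({"relay_a"}))
isolated = find_route(LINKS, "sensor", "gateway", frozenset({"relay_a", "relay_c"}))
```

When `relay_a` is off, the sensor still reaches the gateway through the longer path. Only when both paths are broken does the sensor have to buffer its data and behave as a discontinuous node.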

Resource requirements are key as application volume increases. Microcontrollers offer intelligence at very low cost, and have the capacity to deal with the issues listed above. Some protocols are simply too resource intensive to be practical on small nodes. There will be limitations around discontinuous operation and big data storage unless significant amounts of serial flash or other storage media are included. As resources are increased, to reduce overall system costs, aggregation nodes are more likely to be added to provide additional shared storage resources.

Interoperability is essential for most devices in the future. Thus far we have seen sets of point solutions but ultimately users want sensors and devices to work together. By using a set of standardized protocols as well as standardized messaging, devices can be separated from the cloud services that support them. This approach could provide complete device interoperability. Also, using intelligent publish subscribe options, different devices could even use the same cloud services, and provide different features. Using an open approach, application standards will emerge, but today, the M2M standards are just emerging and the applications standards are years in the future. All the main protocols are being standardized today.

Standard information technology security solutions are the core security mechanisms for most of the protocols that offer security. These security approaches are based on:

- TLS

- IPSec / VPN

- SSH

- SFTP

- Secure bootloader and automatic fallback

- Filtering

- HTTPS

- SNMP v3

- Encryption and decryption

- DTLS (for UDP-only security)

As systems will be fielded for many years, designing security in as part of the package is essential.
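As one example of relying on a standard mechanism rather than a custom one, the following sketch configures a TLS client using Python’s standard `ssl` module. The function name `make_client_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions made for illustration, not recommendations from any of the protocols above.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS configuration with certificate checking enabled."""
    # The default client context verifies the server certificate and hostname.
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_tls_context()
```

A TCP stream socket would then be wrapped with `context.wrap_socket(sock, server_hostname=...)` before any application protocol runs over it.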

Implementation Requirements

Privacy is an essential implementation requirement. Supported by privacy laws, almost all systems require secure communication to the cloud to ensure that personal data cannot be accessed or modified and that liabilities are eliminated. Furthermore, device management and the user data that appears in the cloud need to be handled separately. Without this separation, users’ critical personal information is not protected properly: it is available to anyone with management access.

Separation of management and user data is a preferred solution to guarantee privacy for users. Using separate cloud solutions for management and user data provides this isolation and therefore improved security.

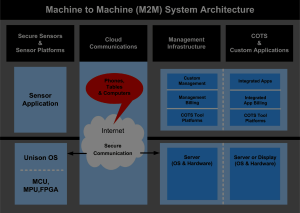

In the system architecture diagram we show the two separate components inside the cloud required for system management and application processing to satisfy privacy laws. Both components may have separate billing options and can run in separate environments. The management station may also include:

- system initialization

- remote field service options (e.g. field upgrades, reset to default parameters, remote test, …)

- control for billing purposes (account disable, account enable, billing features, …)

- control for theft purposes (the equivalent of bricking the device)

Given this type of architecture, there are additional protocols and programs which should be considered:

- Custom developed management applications on cloud systems.

- SNMP management for collections of sensor nodes.

- Billing integration programs in the cloud.

- Support for discontinuous operation using SQLite running on Unison OS to store and selectively update data to the cloud.

Billing is a critical aspect of commercial systems. Telecoms operators have demonstrated that the monthly pay model is the best revenue choice. In addition, automatic service selection and integration for seamless billing is important. Also, credit card dependence creates issues, including cards that are over the limit, expired cards and deleted accounts.

Self Supporting Users are a key to implementation success too. Remote field service so that devices never return to the factory, intelligent or automatic configuration, online help, community help, and very intuitive products are all key.

Application Integration is important too. Today point systems predominate but in the future the key will be making sensors available to a broad set of applications that the user chooses. Accuracy and reliability can substantially influence application results and competition is expected in this area as soon as standard interfaces emerge. Indirect access via a server ensures security, evolution without application changes, and billing control.

Discontinuous Operation and Big Data go hand in hand. With devices connecting and disconnecting randomly, sensor data must be preserved locally and the cloud updated later. Storage limitations exist for both power and cost reasons. If some data is critical, it may be saved while other data is discarded. Alternatively, all data might be saved and a selective update to the cloud performed later. Algorithms to process the data can run in the cloud, in the sensors or in any intermediate nodes. All of these options present particular challenges to the sensor, cloud, communications and external applications.
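A store-and-forward scheme of this kind can be sketched with SQLite, here through Python’s standard `sqlite3` module and an in-memory database. The table layout, the `critical` flag and the function names are hypothetical, and the `upload` callback stands in for whatever cloud protocol is in use; this is not the Unison OS API.

```python
import sqlite3
from typing import Callable


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the local reading store, creating the table if needed."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS readings ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " sensor TEXT NOT NULL,"
        " value REAL NOT NULL,"
        " critical INTEGER NOT NULL,"
        " uploaded INTEGER NOT NULL DEFAULT 0)"
    )
    return db


def record(db: sqlite3.Connection, sensor: str, value: float,
           critical: bool = False) -> None:
    """Save a reading locally, whether or not the link is up."""
    db.execute(
        "INSERT INTO readings (sensor, value, critical) VALUES (?, ?, ?)",
        (sensor, value, int(critical)),
    )
    db.commit()


def sync(db: sqlite3.Connection, upload: Callable[[str, float], bool],
         critical_only: bool = False) -> int:
    """Upload pending readings in order; return how many were sent."""
    query = "SELECT id, sensor, value FROM readings WHERE uploaded = 0"
    if critical_only:
        query += " AND critical = 1"
    rows = db.execute(query + " ORDER BY id").fetchall()
    sent = 0
    for row_id, sensor, value in rows:
        if not upload(sensor, value):
            break  # link dropped again; keep the rest for the next attempt
        db.execute("UPDATE readings SET uploaded = 1 WHERE id = ?", (row_id,))
        sent += 1
    db.commit()
    return sent
```

Calling `sync(db, upload, critical_only=True)` first and `sync(db, upload)` later gives the selective update described above: critical readings go out as soon as a connection appears, and the remainder follows when bandwidth and power allow.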

Multiple connection sensor access is also a requirement to make sensors truly available to a broad set of applications. This connection will most likely happen through a server to simplify the sensors and eliminate power requirements for duplicate messages.

IoT Protocols for the Unison OS

The Unison RTOS is targeted at small microprocessors and microcontrollers for IoT applications. As such it offers many of the things that you would expect are required. Unison has:

- POSIX APIs

- Extensive Internet protocol support

- All types of wireless support

- Remote field service

- USB

- File systems

- SQLite

- Security modules

and much more. This is in addition to off the shelf support and factory support for the wide set of protocols discussed here.

By providing a complete set of features and modules for IoT development along with a modular architecture, developers can insert their protocols of choice for IoT development. Building protocol gateways is also possible. This approach minimizes risk by eliminating lock in and shortening time to market.

Unison is also very scalable, which allows it to fit into tiny microcontrollers and also provide comprehensive support on powerful microprocessors. The memory footprint is tiny which leads directly to a very fast implementation.

Summary

Many protocols are being touted as ideal Internet of Things (IoT) solutions. Often the correct protocol choices are obscured by vendors with vested interests in their offerings. Users must understand their specific requirements and limitations and have a precise system specification, so that the correct set of protocols is chosen for the various management, application, security and communications features and all implementation specifications are met.

- Internet of Things Requirements and Protocols[1], Embedded Computing, 2015, Kim Rowe

[1]This is an update from the article provided in Embedded Computing.

Kim Rowe

Founder, RoweBots Limited

pkr@rowebots.net

Kim is a serial entrepreneur in the areas of computer systems and electronics. With over 30 years in companies doing technology product development, Kim has extensive experience in embedded systems, product development, general management, marketing, sales and finance. For the past decade, Kim has been managing RoweBots, an Internet of Things / Machine to Machine product development company. Kim holds a BESc from The University of Western Ontario, an MBA from The University of Ottawa and an MEng from Carleton University.